Fight against facial recognition camera rollout set to reach High Court amid 'open prison' fears

Bev Turner argues against facial recognition cameras in the UK |

GB NEWS

The landmark case comes just weeks after Labour announced plans to expand the use of the controversial technology across Britain

The High Court is set to rule on whether facial recognition cameras are being used illegally in a landmark case that could force police across the UK to roll back their use, GB News can reveal.

It will be heard just weeks after the Government announced plans to expand the use of the controversial technology in Britain.

The legal challenge against the Metropolitan Police has been brought by Silkie Carlo, director of the Big Brother Watch think-tank, and Shaun Thompson, who was held by officers at London Bridge after being misidentified by a facial recognition camera in February 2024.

“It could be effectively a blanket cover of the whole city... effectively the whole city would feel like an open prison,” said Ms Carlo.

The two-day hearing on January 27 and 28 will examine whether Scotland Yard is acting within the law when it deploys live facial recognition on the capital's streets.

It comes weeks after the Home Office confirmed it wants to widen the use of facial recognition and launched a 10-week public consultation that could pave the way for new legislation.

Facial recognition is currently used by eight police forces in England and Wales to hunt wanted suspects, as well as missing or vulnerable individuals.

But Labour argues the current legal framework does not give police “sufficient confidence” to use the technology at a greater scale.

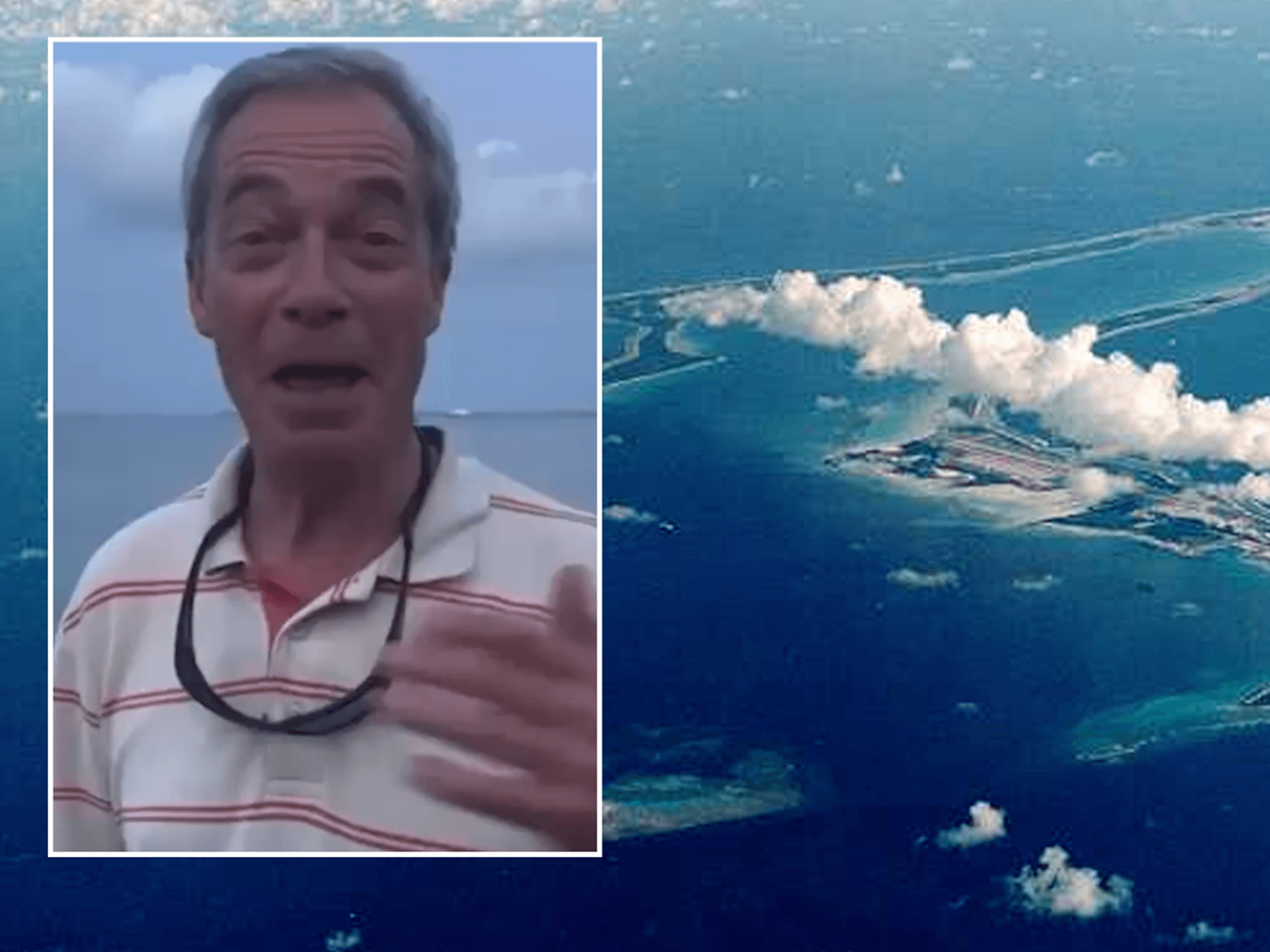

The Met has defended its mega facial recognition rollout

| PA

A ruling against the Met could force police forces nationwide to rewrite their policies, or push ministers to introduce legislation to regulate live facial recognition for the first time.

Judges will be asked to decide whether the Met’s current policy — which governs where the technology can be used, who can be placed on watchlists and how scans are carried out — complies with existing human rights laws.

Ms Carlo said: “It is a breach of privacy and freedom of expression rights.”

However, the challenge does not seek to ban facial recognition outright. Instead, it focuses on the scope of police powers under the current policy.

Shaun Thompson was stopped by police outside London Bridge station

|BIG BROTHER WATCH

“This case could have ramifications for the rest of the country,” Ms Carlo said. “We are challenging the Met Police use of live facial recognition on the basis of where they can use it.”

Under existing guidance, the Met can deploy live facial recognition in crime hotspots, transport hubs such as train stations, and surrounding areas.

“Because they can use the cameras in so many places it becomes so broad that it is hard to find places that they cannot use it,” Ms Carlo said. “It could be effectively a blanket cover of the whole city.”

She added: “The UK is one of the most surveyed countries in the world in proportion of cameras to people and London is a more surveyed city than any other in the world apart from Beijing.”

What distinguishes live facial recognition from standard CCTV is that it allows faces to be analysed and matched in real time using software layered onto existing camera networks.

“Live facial recognition is software that can be used with camera feeds which are already there,” Ms Carlo said. “A lot of infrastructure is already in place and can be quickly and easily turned into live facial recognition.”

She said both permanent and mobile deployments are already on the rise. Hammersmith and Fulham Council is upgrading its cameras to enable live facial recognition, while the Met has begun installing permanent systems in some areas.

“This is the first time cameras have been put up permanently in Croydon,” she said, adding that the Met uses mobile units mounted on lampposts.

Despite the expansion, Ms Carlo says there is still no law written specifically to govern live facial recognition.

“Under the current system not a single law has the words ‘facial recognition’ in it,” she said. “The Government has not even tabled a debate on live facial recognition.”

Instead, police rely on existing legislation, including human rights and data protection laws, which campaigners argue was never designed to authorise real-time biometric surveillance of the public.

The legal case will argue such surveillance contravenes existing safeguards, particularly Article 8 of the European Convention on Human Rights, incorporated into UK law by the Human Rights Act, which protects the right to privacy.

A central plank of the case concerns the risk of false matches.

In February 2024, Ms Carlo witnessed her co-claimant Mr Thompson, a 39-year-old council worker from Walworth, Southwark, being stopped by police after a facial recognition camera incorrectly flagged him as a criminal.

Despite not committing any offence, Mr Thompson was held by police for around half an hour. Officers demanded his fingerprints and insisted he provide identification. When he showed them a photograph of his passport on his phone, it was rejected as “not good enough”.

The facial recognition identification later proved to be wrong.

Mr Thompson said: “I was misidentified by a live facial recognition system while coming home from a community patrol in Croydon. I was told I was a wanted man and asked for my fingerprints even though I’d done nothing wrong.

“It felt shocking and unfair. I’m challenging this because people should be treated with respect and fairness, and what happened to me shouldn’t happen to anyone else.”

Concerns about accuracy are not new. Early police trials of live facial recognition — including deployments at Notting Hill Carnival — produced false alerts.

The technology has already been the subject of successful legal challenges, including a case brought by Ed Bridges and supported by the pressure group Liberty, in which the use of facial recognition cameras in Wales was found unlawful due to failures in privacy and equality safeguards.

Campaigners argue these cases prove errors and legal shortcomings are not isolated incidents but recurring risks as the technology expands.

Ms Carlo will also suggest the technology risks discouraging lawful protest and public assembly.

“If you have mass surveillance, it eats away at the right to freedom of expression,” Ms Carlo said. “If you want to go to a protest or march you will be worried that you will be in a permanent police line-up.”

Ms Carlo admitted the Met has since tightened its policy, but said it remains too wide.

“They said they’ve changed their policy and would tighten it up, but it’s still very broad,” she said.

The Home Office has confirmed further investment in the technology, including funding for live facial recognition and a national facial matching service.

Policing minister Sarah Jones said a wider roll-out could mark "the biggest breakthrough" in catching serious offenders since DNA analysis.

Any legislation informed by the public consultation could take up to two years to pass.
