Scammers want your 'money and data', Britons warned, as Willy Wonka event disaster blamed on AI ads

‘It will soon be almost impossible for the average person to spot AI creations’, said Joe Davies


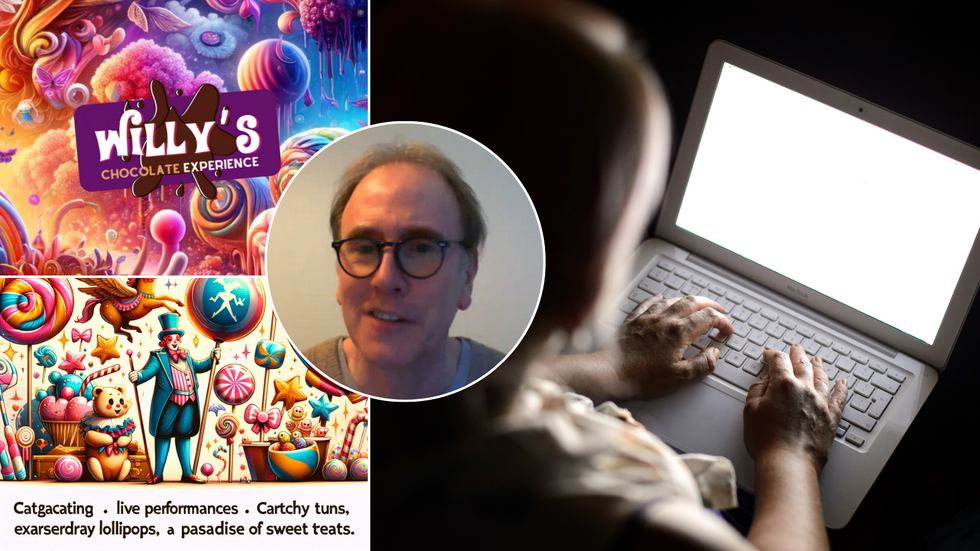

Britons “need to exercise due diligence” about AI, experts have warned, in the wake of the infamous ‘Willy’s Chocolate Experience’ event which duped hundreds of guests out of money by using AI-generated ads.

The event, which went viral on social media, left attendees furious – and despite promising a “wondrous and enchanting” Charlie and the Chocolate Factory-inspired day out, delivered an “empty warehouse with hardly anything in it”.

Thanks to its virality, the event sparked discussions about AI images and their potential for use in online scams, even prompting an official complaint arguing it used misleading ads – which the Advertising Standards Authority (ASA) said it was reviewing.

With this in mind, GB News spoke to industry experts to find out just what Britons need to watch out for when it comes to artificial intelligence online.

David Emm said AI images could help online scammers come across as more legitimate than they would have before | GB News/PA/House of Illuminati

Speaking to GB News, David Emm, principal security researcher at cyber-security firm Kaspersky, warned that tech scams which might once have been used only against high-level figures can now put everyday people at risk.

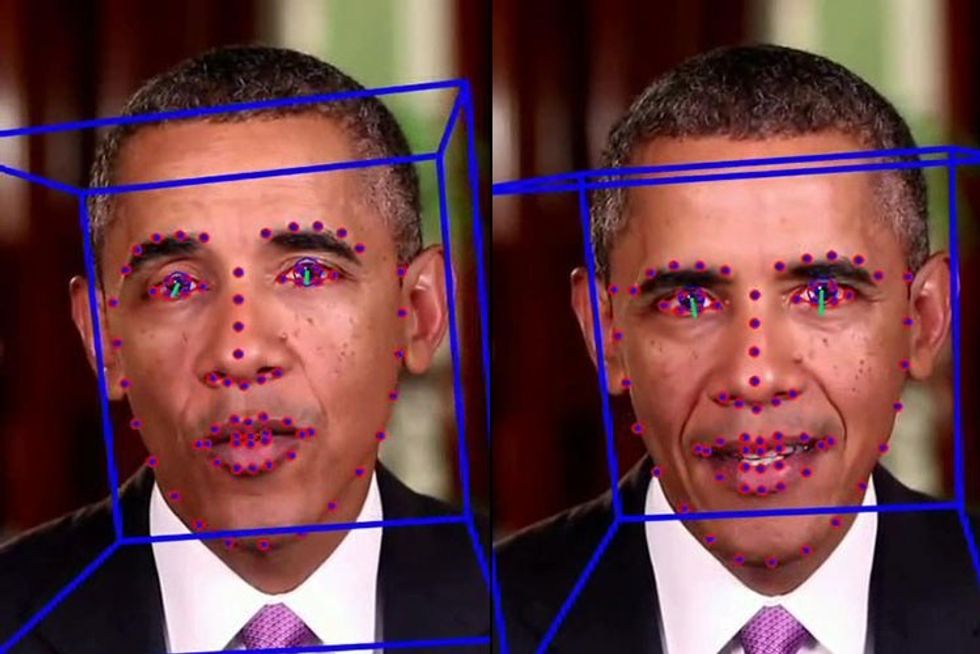

He pointed to ‘deepfakes’, i.e., fake videos or recordings of people designed to impersonate them without their consent, as one way scammers could trick regular people into parting with their money – like an advanced form of traditional ‘phishing’ scams.

Emm said: “Now the technology is getting to the point where somebody could create a deepfake with a fake persona and a fake picture.

“Even a couple of years ago, that would have been the preserve of people who were prepared to spend the time, money and effort for a targeted attack.

Willy's Chocolate Experience looked much more "professional" online due to its AI-generated "spectacular graphics" | House of Illuminati/X

“People who otherwise wouldn’t see any return on investment in phishing or didn’t have the skills might think ‘well, if I can get ChatGPT or equivalent to do it for me, then great’.”

He said AI was a “useful addition in the armoury of the people who are already involved” in online crime, and warned that it was already in use in dating scams, where entire personalities can be artificially generated.

Emm was keen to talk up the positives of AI – he said it was “incredibly useful” as a tool for businesses to help with number-crunching and for advertisers to do some of the grunt work in copywriting, and noted it can be “used by individuals for fun” – but he highlighted the risks, too.

Emm said the starting point for online scammers is to trick a potential victim into doing something – and AI can help them look more credible on that first approach.

He compared another misleadingly sold event, ‘Lapland New Forest’ in 2008, where Father Christmas was punched in his grotto by a furious parent, with ‘Willy’s Chocolate Experience’ – the former was advertised with a “line drawing”, while the latter looked much more “professional” due to its AI-generated “spectacular graphics”.

Joe Davies, founder of digital marketing agency FatJoe, told GB News: “AI has substantially lowered the barriers to creating misleading or falsely advertised content.”

Davies said part of AI’s appeal to advertisers and marketers is how quick and cheap it is to use, meaning “sham events” like the one in Glasgow would only get more common.

Davies said: “If AI continues along the current trajectory, I believe it will soon be almost impossible for the average person to spot AI creations.”

He warned consumers to stay AI-savvy and said, if in doubt, to check for weird details like “extra fingers” or “melting buildings”, odd-looking textures, nonsensical backgrounds or “complete gibberish” instead of text.

An ASA spokesperson said: “We’re aware that AI-generated material is increasingly appearing in ads. While this technology could provide innovative opportunities for advertisers, there are also risks, and it’s important they use the technology responsibly.

“Our rules make it clear that all ads, regardless of how they were made, must be legal, decent, honest and truthful.”

It said advertisers must not use AI to mislead consumers, exaggerate products or generate “harmful or irresponsible material”.

'Deepfake' scammers could now use AI to target everyday people rather than high-profile individuals like politicians and celebrities, David Emm warned | UC Berkeley

While current ASA guidelines are not directly targeted at AI – or any other specific kind of tech, for that matter – the regulator said it was looking into adapting its guidance “in the near future” to help advertisers use AI more responsibly.

David Emm said: “The game hasn’t changed in terms of what an attacker is going to try and do – they want you to part with your data, your money, your login credentials, to lure you to a fake site or to get you to do something which will cause you harm.

“The key thing as an individual is just to exercise caution – whether it’s a Black Friday deal or an event like the Wonka one.

“We’re all brought up to stop and look before we cross the road, so it’s about getting a sort of digital equivalent – a digital security consciousness… As consumers, we need to exercise some due diligence.”